This is an edited and slightly expanded transcript of a lightning talk I gave for JHUG's virtual meetup.

Throughout my years as a software engineer, I was fortunate enough to join several different teams in various companies. Each of these had different development processes and approaches when it comes to building software, but they had one thing in common: Code Reviews.

Each team used code reviews for different reasons. In some cases, a code review was a way to find defects before pushing something to production. In other cases, they acted as a knowledge-sharing tool. There were also teams that used code reviews as a historical reference.

Regardless of how you use it though, there is one thing that is true in every project I've seen so far:

Nobody likes code reviews!

Code authors hate code reviews. They spend time writing all this code. And after all their hard work, they need to submit a code review and ask their teammates to spot all the errors and mention all the negative things they might have done.

On the other side, code reviewers also hate code reviews. They are working on some piece of code themselves when someone asks them for a review. They hate all this context switching; they want to focus on what they're currently working on, not spend time looking at other people's code and arguing about the best way to implement something.

So, a code review is a process that by nature results in conflict.

However, there are certain things that in my experience can make a huge difference and make the whole process less painful.

Code authors

Let's start with what the code authors can do to ease the process for the reviewers.

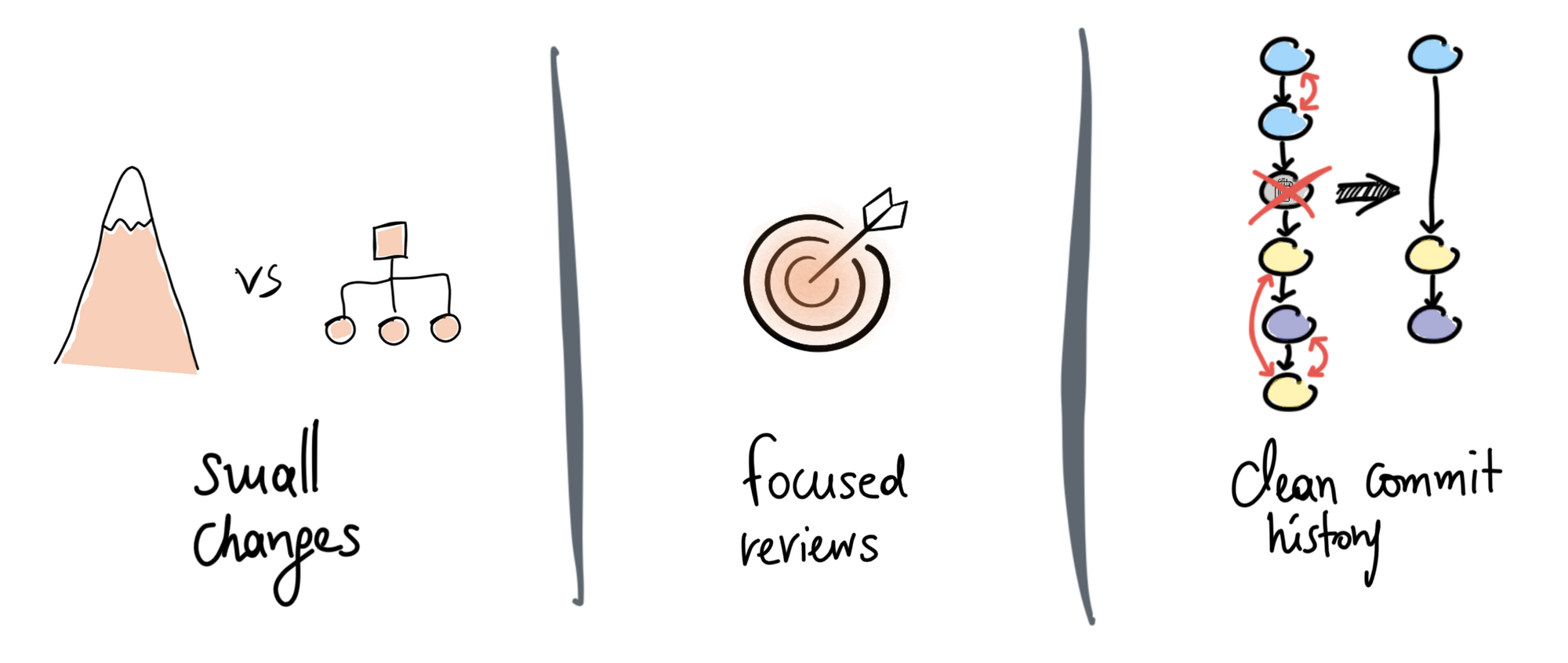

Keep it short & sweet

Imagine yourself having to choose a new book to read.

Would you choose a book that has an enormous number of pages or a book of a reasonable size? Well, the reasonably sized one of course; it's much easier to read.

Many times, in order to develop a feature, we might have to change quite a lot of the existing code. We might have to add modules, implement new services, alter schema tables, etc.

However, the more changes we make, the harder it is for a reviewer to spot problems we might have missed. Always favour splitting a feature into smaller code reviews rather than including all the changes in a single one. A good rule of thumb is that a PR (pull request) shouldn't have more than 500 lines of code changes.
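As a rough illustration of the 500-line rule, here is a minimal Python sketch that sums the changed lines reported by `git diff --numstat` (one `added<TAB>deleted<TAB>path` row per file). The function names and the idea of running this as a pre-review check are my own assumptions for the example, not part of any tool mentioned here.

```python
# Hypothetical helper: checks a change set against the 500-line rule of thumb.
MAX_CHANGED_LINES = 500


def count_changed_lines(numstat_output: str) -> int:
    """Sum added and deleted lines from `git diff --numstat` output."""
    total = 0
    for line in numstat_output.splitlines():
        parts = line.split("\t")
        if len(parts) < 3:
            continue  # skip blank or malformed rows
        added, deleted = parts[0], parts[1]
        # Binary files are reported with "-" instead of line counts.
        if added == "-" or deleted == "-":
            continue
        total += int(added) + int(deleted)
    return total


def is_reviewable(numstat_output: str, limit: int = MAX_CHANGED_LINES) -> bool:
    """Return True when the change set stays within the line limit."""
    return count_changed_lines(numstat_output) <= limit
```

You could feed it the output of something like `git diff --numstat main...HEAD` before opening the PR; if it reports more than the limit, that is a hint to split the work.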

Back to the book analogy...let's say you have a choice between a book that talks about philosophy, politics, epic battles, music, art, mathematics, etc. And then you have another book that focuses only on one of these subjects. Which one is easier to read?

As code authors, we tend to massively expand the scope of our changes...it's very easy to get carried away when we work. How many times did you have to work on a feature and you ended up also fixing 2-3 bugs, along with a major refactoring?

This can be very confusing for a reviewer, as they have to context switch between all the different things that your code has changed. Try to create focused reviews instead. As with code, high cohesion is preferable.

Finally, imagine a book that has all its chapters mixed and another one that has a logical continuation. Which one is easier to read?

In code reviews, these chapters are our commits, and your commit history should tell a coherent story. Of course, this is quite difficult to do, as we don't necessarily know from the beginning where some code changes might lead us.

Interactive rebase is your friend. Once you're satisfied with your changes, try to formulate your commit history in a way that would make the reader understand the journey you went through. Squash and edit commits, reword your commit messages, etc.

Give context

Every code review needs a descriptive title, so that a reviewer who reads it immediately understands what the code changes are all about. If you are also using an issue tracking tool, it's worth including the issue number in the title as well.
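If your team agrees on a title convention, it can even be checked automatically. The sketch below assumes a hypothetical format of `<ISSUE-KEY>: <description>` (for example `SHOP-142: Add coupon validation to checkout`); the pattern, the minimum description length and the function name are all illustrative choices, so adapt them to whatever your issue tracker uses.

```python
import re

# Assumed convention: an issue key such as "SHOP-142", a colon and a space,
# then a description of at least 10 characters.
TITLE_PATTERN = re.compile(r"^[A-Z][A-Z0-9]+-\d+: \S.{9,}$")


def is_descriptive_title(title: str) -> bool:
    """Return True when the title follows the assumed convention."""
    return TITLE_PATTERN.match(title.strip()) is not None
```

A check like this only enforces the shape of the title, of course; whether the description is actually meaningful is still up to the author.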

In addition to the title, a code review should include a detailed description. Some things that are worth including in this description are:

- Overview (as bullet-points) of the changes

- Reasoning behind certain design choices

- Potential problems that you had to go through that might help reviewers better understand your approach

- Things that are missing or that you intentionally left out

- Links to resources that could help the reviewers, e.g. a link to the issue ticket that these code changes try to address

Finally, it's very useful to annotate with comments all the places in your code review that might require clarification. This really helps the reviewers to avoid going through the same thought-process that the author went through and avoid asking the same questions.

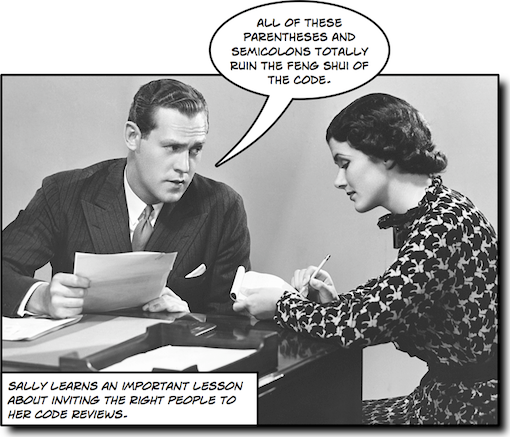

Choose your audience

Usually, when it comes to code reviews, the author must choose certain people from the team to review their code.

It's a good practice to include someone who is familiar with the area of code that you changed, for example the person who was the last one to modify it.

In addition to that, it's also useful to include someone unfamiliar with that particular code. The benefit is that they will look at it with completely fresh eyes and might uncover problems or enhancements that you have not thought about.

Finally, always make sure to include more people than the required number of reviews. For example, if your team's policy is to have 2 reviews, include at least 3-4 people. This allows the whole process to be more efficient as it takes into account that some of your team-members might be too busy or too distracted and others can take their place.
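The three guidelines above can be put together in a few lines of Python. This is only a sketch under my own assumptions: `team` is an ordered list of names, `familiar` is the set of people who already know the changed code (e.g. the last person to modify it), and `spare` is how many reviewers to add on top of the required number.

```python
def pick_reviewers(author, team, familiar, required=2, spare=1):
    """Pick one familiar reviewer, one with fresh eyes, then fill up to
    `required + spare` people. All names and parameters are hypothetical."""
    candidates = [member for member in team if member != author]
    experts = [member for member in candidates if member in familiar]
    fresh = [member for member in candidates if member not in familiar]

    # Start with one person who knows the code and one who does not.
    picked = experts[:1] + fresh[:1]

    # Add more people so the review does not stall if someone is busy.
    for member in candidates:
        if len(picked) >= required + spare:
            break
        if member not in picked:
            picked.append(member)
    return picked
```

For example, with a policy of 2 required reviews and 1 spare, a five-person team yields three reviewers: one who knows the code, one who doesn't, and one extra.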

Code reviewers

Now, let's have a look at what the reviewers should do when they receive a code review request.

Tests as important as production code

What's the best way to ensure that a piece of code is working as you expect? Well, testing of course!

A code review should always contain the necessary tests to back up the actual logic changes. This means that not only should there be tests, but they should be reviewed as well.

Some things you should be looking for in the tests:

- Are the tests readable?

- Do they cover all edge cases?

- Are the tests overly complicated?

- Are the tests testing exactly what we need?

- Is there any room for DRYing them up?

Tooling is important

Code reviews contain code. So, just as you would use tools (e.g. IDEs) to write code more easily, you should do the same with reviews.

I've seen so many people trying to use GitHub's browser-UI to review code changes. So they have to go up and down, trying to piece all the information together, trying to find usages using the browser's search functionality, etc. This is so difficult and inefficient.

It's also very easy to miss problems that way. For example, GitHub shows you only the code that has changed. But many times, you might also want to see the code that hasn't changed...for example, there might be duplication, or the change might have made a method obsolete, etc.

Here are a few tools that might help you with reviewing code:

- CodeStream: Plugin that allows you to do in-IDE code reviews. It is available for all major IDEs out there, i.e. IntelliJ, VSCode, Atom, Visual Studio. The downside of this one is that it requires read access to your GitHub repository, and your company might have strict rules about that.

- Upsource: This is a JetBrains product, so it is only available for IntelliJ. Also, it requires a JetBrains license (although at the moment it is free for up to 10 people). However, if your company is already using JetBrains products, you might want to go with that.

- Octotree: If you don't want an IDE-based solution, you could try a browser plugin. It doesn't give you all the benefits of an IDE of course, but at least it makes the review process a bit easier.

- Codespaces: GitHub recently released a browser-based IDE. Unfortunately, at the moment, there is limited access to this feature, so I personally haven't tried it. However, I can see how something like that might help with code reviews as well.

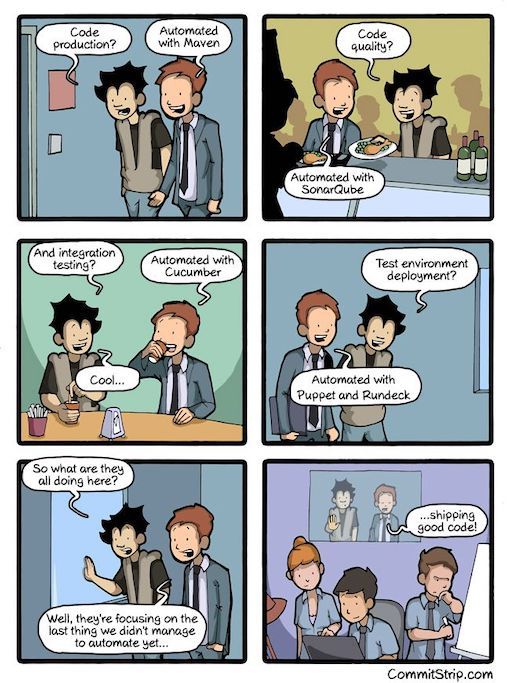

Focus on design & logic

One of the things I hate in code reviews is comments like "The indentation is not correct here" or "This variable is not used".

Human reviewers should focus on everything that cannot be automated. This basically means logic and design: making sure that the logic is sound, that the design makes sense and uses the correct abstractions, and that the code is reusable and maintainable.

Everything else should be automated and should be checked in your CI pipeline. Some of the things you should be automating are:

- Formatting

- Static code analysis with predefined styling rules

- Static code analysis for common bugs

- Code coverage

- Checking project external dependencies

Pick your battles

There are so many things to look for in a code review. Is the logic correct? Is the design abiding by the SOLID principles? Design patterns? Performance? How about security? Is the solution over-engineered? Is it following the team's and company's agreed practices?

In addition to all the above, you need to keep in mind that different team members might have different interpretations or trade-offs of these. You might want to sacrifice some of your performance in favour of readability. You might not want to use a design pattern at this point to avoid premature optimisations.

So, to avoid making a code review drag on for a long time and to maintain the good dynamics of the team, you should pick your battles. Nobody likes someone who leaves comments for every single problem they find in a code review. Make sure you only mention problems that are very important to you and leave the rest.

Wear the "No, but..." hat

Once you find a problem that you want fixed, avoid just saying "No, this won't work". Chances are that the author had something else in mind, so just shooting down his/her ideas is a recipe for disappointment.

Instead, try to explain why something might not work or how it could be written in a better way. Give concrete reasons.

Also, try to offer solutions rather than focusing only on the problems. Be constructive. In general, it's good to have the "Yes, and..." and "No, but..." mentality.

Avoid long threads & flame wars

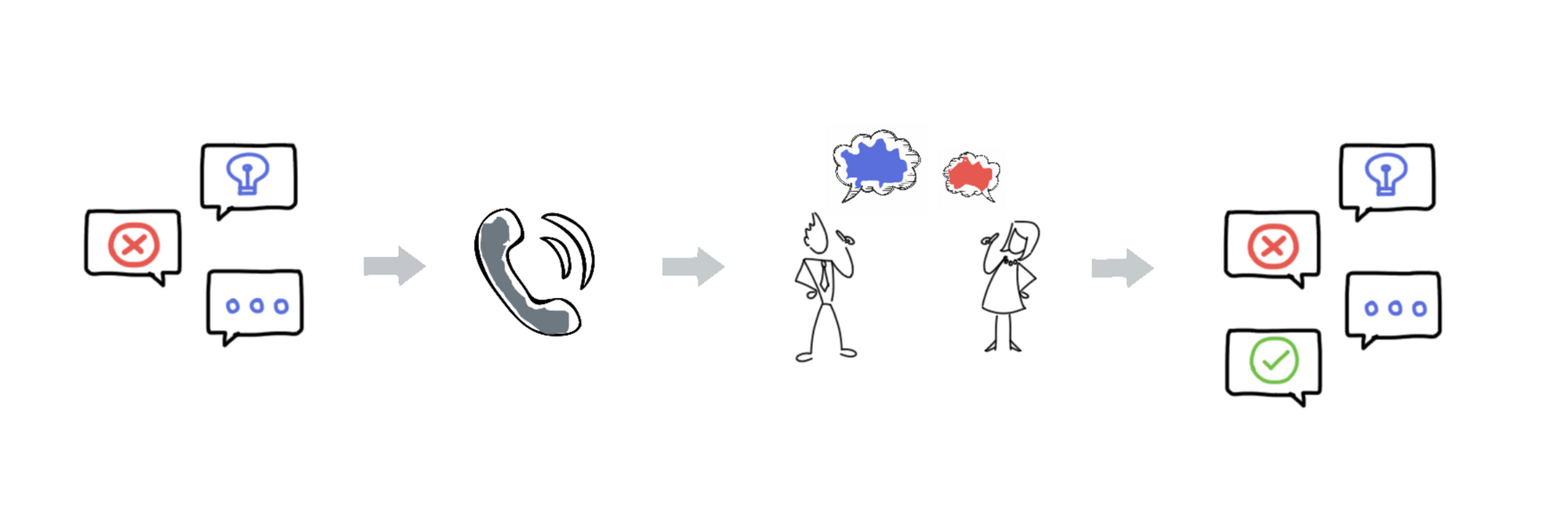

There are times when both sides, the author and the reviewer, have conflicting thoughts about a certain approach. This can lead to long threads of comments and heated arguments, with each side arguing why their approach is better. This leads to long-lasting code reviews and negativity in general.

Instead, what I've seen working much better is, at the first sign of a long conflict, to pick up the phone and talk to the other person directly. This makes the whole process much faster as it makes the communication synchronous.

In addition to that, it makes the conflict resolution much easier. When you speak to the other person directly, you have more empathy and you are less likely to be as judgemental and hurtful as you might have been over asynchronous comments.

One important step in this process is to update the code review with a summary of your discussion. This way, other people are aware of what happened and what was agreed.

Praise goes a long way

Finally, be positive! Always try to find something positive to say, celebrate your successes as a team. You cannot imagine how important this is.

As developers, we have big egos...we are very proud of the code we're writing and we like to share it with our peers. So, congratulating a code author for something he/she wrote or a design decision he/she took can go a long way!